While configuring one setting or another, almost every Internet user has at some point seen the word "Unicode" on the screen. This article explains what it is.

Definition

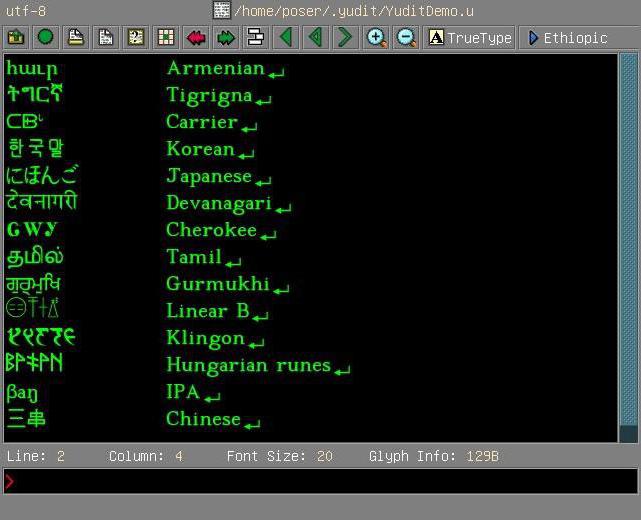

Unicode is a character encoding standard. It was proposed in 1991 by the nonprofit organization Unicode Inc. The standard is designed to bring together as many kinds of characters as possible in a single character set, so that one document can contain letters from different languages (from Russian to Korean) alongside mathematical symbols. All of these characters display correctly, provided the system has a font that covers them.

Why it was created

Long before the unified Unicode system appeared, the encoding of a document was chosen according to the preferences of its author. As a result, reading a single document often required switching between several code tables, sometimes more than once, which greatly complicated life for ordinary users. As already mentioned, in 1991 the nonprofit organization Unicode Inc. proposed a solution: a new kind of character encoding intended to unify the many obsolete and incompatible standards. Unicode achieved what seemed unthinkable at the time: a single tool that supports a huge number of characters. The result exceeded many expectations, as documents appeared that contained English and Russian text, Latin, and mathematical expressions all at once.

Before a single encoding could be created, however, a number of problems caused by the huge variety of existing standards had to be resolved. The most common were:

- garbled text (mojibake, known in Russian as "krakozyabry");

- character set limitations;

- encoding conversion problem;

- duplication of fonts.
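The first two problems on this list are easy to reproduce today. The following Python sketch, using only built-in codecs, writes Russian text in the Windows Cyrillic code page (cp1251), reads the bytes back as Latin-1, and then checks whether Korean fits into cp1251 at all. The choice of code pages and the helper names `show_mojibake` and `fits_in_code_page` are illustrative assumptions, not part of any standard.

```python
# Sketch: reproducing mojibake ("krakozyabry") and a code-page limitation.
# cp1251 (Windows Cyrillic) and latin-1 are example code pages chosen for
# illustration; any two mismatched 8-bit code pages behave the same way.

def show_mojibake(text: str, written_as: str, read_as: str) -> str:
    """Encode text with one code page and decode the bytes with another."""
    raw = text.encode(written_as)  # bytes as the author's system saved them
    return raw.decode(read_as)     # what the reader's system displays


def fits_in_code_page(text: str, code_page: str) -> bool:
    """Return True if every character of text exists in the given code page."""
    try:
        text.encode(code_page)
        return True
    except UnicodeEncodeError:
        return False


print(show_mojibake("Привет", "cp1251", "latin-1"))  # Ïðèâåò
print(fits_in_code_page("Привет", "cp1251"))         # True
print(fits_in_code_page("안녕", "cp1251"))             # False: no Korean in cp1251
```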

A short historical digression

Imagine it is the 1980s. Computers are not yet widespread and look quite different from today's. Each operating system is unique in its own way, and enthusiasts adapt it to their specific needs. As the need to exchange information grows, everything has to be adapted yet again. Opening a document created under another OS often produces an unintelligible jumble of characters on the screen, and the guessing game with encodings begins. It is not always quick: sometimes a needed document can be read only six months later, or even later. People who exchange information often build conversion tables for themselves, and this work reveals an interesting detail: each table has to be built in two directions, "from mine to yours" and back. The machine cannot simply invert the table; for it, the source is in the right column and the result in the left, never the other way round. If a document needed any special characters, they first had to be added, and then the partner had to be told what to do so that these characters would not turn into gibberish. And let's not forget that every encoding required its own fonts to be developed or installed, which led to a huge number of near-duplicate fonts in the OS.

Imagine opening the font list and seeing ten copies of Times New Roman that differ only by small suffixes such as Cyr, CE, Greek or Baltic. Is it clear now why a universal standard was urgently needed?

“The Founding Fathers”

The origins of Unicode date back to 1987, when Joe Becker of Xerox, together with Lee Collins and Mark Davis of Apple, began researching the practical creation of a universal character set. In August 1988, Joe Becker published a draft proposal for a 16-bit international multilingual encoding system.

A few months later, the Unicode working group expanded to include Ken Whistler and Mike Kernaghan of RLG, Glenn Wright of Sun Microsystems and several other specialists, which allowed the group to complete the preliminary draft of a unified encoding standard.

General description

Unicode is built on the concept of a character: an abstract unit that exists in a particular writing system and is rendered through glyphs (its visual "portraits"). Each character is assigned a unique code in Unicode, which belongs to a specific block of the standard. For example, the letter B exists in both the English and the Russian alphabets, yet Unicode treats them as two different characters (Latin B and Cyrillic В). Each has its own lowercase form (b and в), and each is described by a code point (its key in the Unicode database), a set of properties, and a full name.
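The Latin B / Cyrillic В example can be checked with Python's standard `unicodedata` module. This minimal sketch prints, for each letter, its code point, its full Unicode name, one of its properties (the general category) and its lowercase mapping; the helper `describe` is a hypothetical name chosen for this example.

```python
import unicodedata


def describe(ch: str) -> tuple[str, str, str, str]:
    """Return the code point, name, general category and lowercase form of ch."""
    return (
        f"U+{ord(ch):04X}",        # the character's unique number (code point)
        unicodedata.name(ch),      # its full Unicode name
        unicodedata.category(ch),  # one of its properties: "Lu" = uppercase letter
        ch.lower(),                # its own lowercase mapping
    )


latin_b = "B"           # U+0042
cyrillic_ve = "\u0412"  # U+0412, drawn almost identically in most fonts

for ch in (latin_b, cyrillic_ve):
    print(*describe(ch))
# U+0042 LATIN CAPITAL LETTER B Lu b
# U+0412 CYRILLIC CAPITAL LETTER VE Lu в

print(latin_b == cyrillic_ve)  # False: same shape, different characters
```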

Unicode Benefits

Unicode stood out among its contemporaries thanks to its much larger space for encoding characters. Its predecessors used 8 bits and could therefore represent only 2^8 = 256 characters, whereas the new design offered 2^16 = 65,536, a giant step forward. This made it possible to encode almost all existing alphabets in common use.
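To make the difference in capacity concrete, here is a small Python sketch. It decodes the single byte `0xC2` in three 8-bit code pages chosen as examples (Latin-1, Windows Cyrillic and Windows Greek) and shows that each yields a different letter, while in Unicode each of those letters has a code point of its own. The helper `decode_one_byte` is hypothetical.

```python
EIGHT_BIT_SLOTS = 2 ** 8     # 256 values in a legacy 8-bit code page
SIXTEEN_BIT_SLOTS = 2 ** 16  # 65,536 values in the original 16-bit Unicode design


def decode_one_byte(value: int, code_page: str) -> str:
    """Interpret a single byte value according to an 8-bit code page."""
    return bytes([value]).decode(code_page)


print(EIGHT_BIT_SLOTS, SIXTEEN_BIT_SLOTS)  # 256 65536

# The same byte 0xC2 means a different letter in each code page...
for code_page in ("latin-1", "cp1251", "cp1253"):
    letter = decode_one_byte(0xC2, code_page)
    # ...but in Unicode each of those letters has its own code point.
    print(code_page, letter, f"U+{ord(letter):04X}")
```

Because a legacy byte only means something together with its code page, the reader had to know or guess the code page; a Unicode code point is unambiguous on its own.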

With the advent of Unicode, conversion tables were no longer needed for text that both sides store in Unicode: as a single standard, it simply made them unnecessary. For the same reason, garbled text ("krakozyabry") faded into oblivion, and so did the need for duplicate fonts.
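A minimal sketch of why conversion tables become unnecessary: text that mixes English, Russian, Korean and mathematical symbols survives a round trip through a Unicode encoding form (UTF-8 or UTF-16) unchanged, but cannot be stored in a single legacy code page. The sample string and the helper `round_trips` are illustrative assumptions.

```python
def round_trips(text: str, encoding: str) -> bool:
    """True if text survives encoding and decoding with `encoding` unchanged."""
    try:
        return text.encode(encoding).decode(encoding) == text
    except UnicodeEncodeError:
        # The encoding has no representation for some character in text.
        return False


mixed = "English, Русский, 한국어, ∑ x ≤ ∞"

for enc in ("utf-8", "utf-16", "cp1251", "cp1252"):
    print(enc, round_trips(mixed, enc))
# utf-8 True
# utf-16 True
# cp1251 False
# cp1252 False
```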

Unicode Development

Progress does not stand still, of course, and 25 years have passed since the first presentation. Yet Unicode has firmly held its position. This is largely because it proved easy to implement and spread widely, being adopted by developers of both proprietary (paid) and open-source software.

At the same time, one should not assume that the Unicode available today is the same as a quarter of a century ago. The standard has gone through many versions since then, and its code space now spans 1,114,112 code points (U+0000 to U+10FFFF). A larger space was deliberately not adopted so that UTF-16, which is built on 16-bit (2^16) units, could still represent every character. Between its first release and version 2.0.0, the number of characters in the Unicode Standard almost doubled, and growth continued in later years. By version 4.0.0 the standard itself had to be expanded further, and as a result Unicode took on the form we know today.
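The limit of U+10FFFF follows from how UTF-16 handles characters beyond the original 16-bit range: it splits each of them into a pair of 16-bit "surrogate" units, each carrying 10 bits. The Python sketch below computes such a pair by hand and compares it with the built-in `utf-16-be` codec; the function name `to_surrogate_pair` is hypothetical, and the emoji is just a convenient example of a character above U+FFFF.

```python
MAX_CODE_POINT = 0x10FFFF  # last code point; 17 planes x 65,536 = 1,114,112 in total


def to_surrogate_pair(cp: int) -> tuple[int, int]:
    """Split a code point above U+FFFF into a UTF-16 high/low surrogate pair."""
    if not 0x10000 <= cp <= MAX_CODE_POINT:
        raise ValueError("only supplementary code points need a surrogate pair")
    offset = cp - 0x10000            # a 20-bit value
    high = 0xD800 + (offset >> 10)   # top 10 bits go into the high surrogate
    low = 0xDC00 + (offset & 0x3FF)  # bottom 10 bits go into the low surrogate
    return high, low


emoji = "\U0001F600"  # GRINNING FACE, outside the original 16-bit range
high, low = to_surrogate_pair(ord(emoji))
print(hex(high), hex(low))               # 0xd83d 0xde00
print(emoji.encode("utf-16-be").hex())   # d83dde00
print(17 * 2 ** 16 == MAX_CODE_POINT + 1)  # True
```

The largest value a surrogate pair can carry is 0x10000 + 0xFFFFF = 0x10FFFF, which is why the Unicode code space stops there.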

What else is there in Unicode?

In addition to its huge and constantly growing repertoire of characters, Unicode offers another useful feature: normalization. The same visible text can often be written with different sequences of code points; for example, "é" can be stored as a single precomposed character or as "e" followed by a combining accent. A character-by-character comparison would treat such strings as different.

Normalization solves this: instead of every program checking each possible variant again and again, an algorithm converts text into a standard form, so that equivalent sequences become identical and can be compared, searched and sorted reliably.

Four such algorithms, called normalization forms, have been defined: NFC, NFD, NFKC and NFKD. Each transforms text according to its own strictly defined rules (composing or decomposing characters, and optionally folding compatibility variants such as ligatures), so none of them can be called the most effective in general. Each was designed for specific needs and is in successful use.
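Python's standard `unicodedata.normalize` function implements all four normalization forms. This short sketch compares a precomposed "é" with its decomposed spelling, and shows how the compatibility forms (NFKC, NFKD) also fold the "ﬁ" ligature into plain "fi". The sample strings are chosen for illustration.

```python
import unicodedata

composed = "\u00e9"     # "é" as a single precomposed code point
decomposed = "e\u0301"  # "e" followed by COMBINING ACUTE ACCENT
ligature = "\ufb01"     # "ﬁ", LATIN SMALL LIGATURE FI

# Before normalization the two spellings of "é" compare as different strings.
print(composed == decomposed)  # False

for form in ("NFC", "NFD", "NFKC", "NFKD"):
    same = unicodedata.normalize(form, composed) == unicodedata.normalize(form, decomposed)
    folded = unicodedata.normalize(form, ligature)
    # All four forms unify the two "é" spellings;
    # NFC/NFD keep the ligature, NFKC/NFKD replace it with "f" + "i".
    print(form, same, folded)
```

In practice, NFC is a common choice for storing and exchanging text, while the compatibility forms are more often used for matching and searching, because they deliberately discard distinctions such as ligatures.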

Adoption of the standard

Over its 25-year history, Unicode has probably become the most widely used encoding in the world, and both programs and web pages have been adapted to the standard. The breadth of its adoption is shown by the fact that more than 60% of Internet resources use Unicode today.

Now you know when the Unicode standard appeared and what it is, and you can appreciate the full significance of the invention made by the Unicode Inc. team more than 25 years ago.