The term “neural network”, once familiar only from science fiction books, has gradually and almost imperceptibly entered public life in recent years as part of the latest scientific developments. People in the gaming industry, of course, have known what a neural network is for quite some time. Nowadays, however, everyone comes across the term, and the general public knows and understands it. This shows that science has come closer to everyday life and that new breakthroughs await us. And yet, what is a neural network? Let's try to figure out what the term means.

Present and future

In the old days, neural networks, the works of Hort and stories about people transported into space were closely related concepts, because artificial intelligence with capabilities far exceeding those of a simple machine could only be met in fantasy worlds conjured up by authors' imaginations. Lately, however, ordinary people are surrounded by more and more objects that were once mentioned only in science fiction. This suggests that even the wildest flights of imagination may sooner or later find their counterpart in reality. Books about space travellers and neural networks now have more in common with reality than they did ten years ago, and who knows what will happen in another decade?

Today, neural networks are a technology that can identify a person from nothing more than a photograph. Artificial intelligence is quite capable of driving a car, and it can play and win a game of poker. Moreover, neural networks open new ways of making scientific discoveries by providing computing capabilities that were previously out of reach. This gives a unique chance to understand the world better today. However, news bulletins about the latest discoveries rarely make it clear what a neural network actually is. Is the term applicable to a program, a machine, or a set of servers?

General view

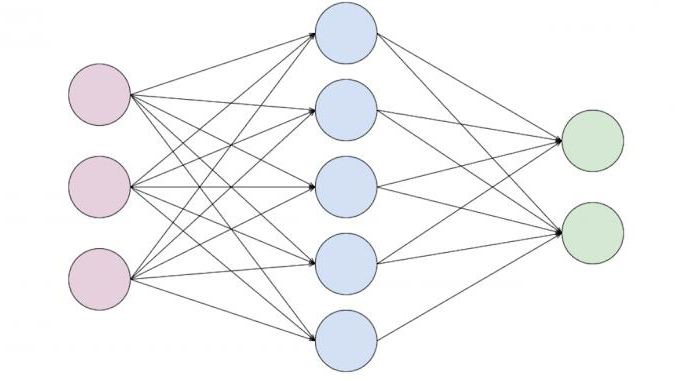

As the term “neural network” itself suggests (and as the images in this article illustrate), it is a structure built by analogy with the human brain. Copying such a complex biological structure completely is not yet realistic, of course, but scientists have already come considerably closer to solving the problem, and recently created neural networks are quite effective. Hort and other science fiction writers were unlikely to know, when they wrote their books, how far science would have advanced by today.

The peculiarity of the human brain is that it consists of numerous elements, neurons, between which information is constantly transmitted. Modern neural networks are similar structures in which electrical pulses carry the exchange of data, just as in the human brain. Still, how does this differ from an ordinary computer? A computer, after all, is also built from parts that exchange data via electric current. In books about space and neural networks, everything usually looks enchanting: huge or tiny machines whose purpose the heroes grasp at a single glance. In reality, for now, the situation is different.

How is it built?

As the scientific works on neural networks show (novels about people transported into space, however fascinating, unfortunately do not belong to this category), the most promising idea in artificial intelligence is to build a complex structure out of very simple parts. A parallel with humans makes this clear: a single part of a mammal's brain has no great abilities on its own and cannot produce reasonable behavior, yet a person as a whole passes an intelligence test without much trouble.

Despite these similarities, this approach to creating artificial intelligence was once ostracized. This is evident both from scientific works and from science fiction about neural networks, such as the space novels mentioned above. Incidentally, even a remark by Cicero can be connected to the modern idea of neural networks: he once caustically suggested that monkeys throw tokens with letters written on them into the air, so that sooner or later they would form a meaningful text. Only the 21st century showed that this sarcasm was misplaced. Science fiction about neural networks goes even further: give the armies of monkeys enough tokens, and they will not only produce a meaningful text but also seize power over the world.

Strength is in unity, brother

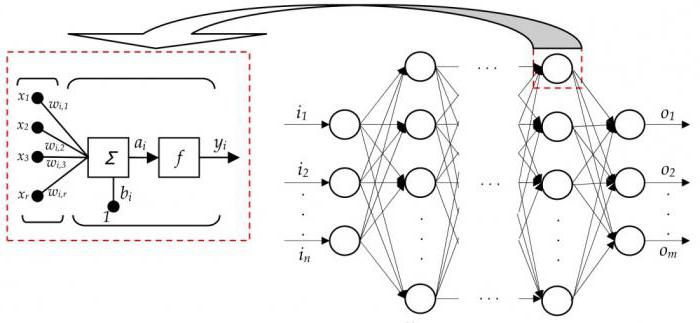

Numerous experiments have shown that training a neural network succeeds only when the network contains a huge number of elements. As scientists joke, a neural network can be assembled from anything, even matchboxes, because what matters is the set of rules that the resulting community obeys. The rules are usually quite simple, yet they make it possible to control how data is processed. In this setting, an artificial neuron is not a device, a complex structure or an incomprehensible system at all, but a few simple arithmetic operations that can be carried out with minimal energy consumption. In science, the classic model of an artificial neuron is called the perceptron. Some authors of scientific works (and of space-travel fiction) assume that neural networks must be far more complicated, but modern science shows that simplicity also gives excellent results.

An artificial neuron works simply: numbers arrive at its input, each is multiplied by its own weight, the results are added up, and the output is either 1 or -1. Have you ever wanted to be one of the space travellers from those books? Real neural networks, at least for now, work quite differently, and it is worth remembering this when imagining yourself in a science fiction story. In practice, a person can work with artificial intelligence like this: show it a picture, and the system answers an either-or question. Suppose a person gives the system the coordinates of a point and asks what is shown there: the ground or, say, the sky. After analyzing the information, the system gives an answer, which may well be wrong (depending on how well developed the AI is).
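The arithmetic of a single neuron fits in a few lines. The following is a minimal Python sketch rather than any library's API: the function name `perceptron_output`, the hand-picked weights for the hypothetical “sky or ground?” question, and the convention that a weighted sum of exactly zero yields 1 are all assumptions made for illustration.

```python
from typing import Sequence


def perceptron_output(inputs: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> int:
    """Return 1 or -1 from a weighted sum of the inputs (a classic threshold neuron)."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    # Multiply each input by its weight and add everything up, plus a bias term.
    total = bias + sum(x * w for x, w in zip(inputs, weights))
    # Threshold: a non-negative sum becomes 1, a negative sum becomes -1.
    return 1 if total >= 0 else -1


# Hypothetical "sky or ground?" neuron: the inputs are the (x, y) coordinates
# of a point, with y growing upwards; the weights are chosen by hand.
weights = [0.0, 1.0]   # ignore x, look only at the height y
bias = -0.5            # points at or above y = 0.5 count as sky

print(perceptron_output([0.3, 0.9], weights, bias))  # 1  -> "sky"
print(perceptron_output([0.3, 0.1], weights, bias))  # -1 -> "ground"
```

Here the weights were picked by a person; the point of training, discussed next, is to let the neuron find them itself.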

Finger to the sky

In a modern neural network, each element tries to guess the correct answer to the question put to the system. At first the accuracy is low, comparable to a coin toss. Real scientific work begins when it is time to train the neural network. Space, the exploration of new worlds and insight into the physical laws of our universe (for which modern scientists rely on neural networks) will open up at the moment when artificial intelligence can be trained to work far more efficiently and effectively than humans.

The point is that the person asking the question knows the correct answer, so that answer can be recorded in the program's data. A perceptron that gave the correct answer gains weight, while one that answered incorrectly loses weight as a penalty. Because the weights change, each new run of the program differs from the previous one. Returning to the earlier example: sooner or later, the program learns to tell clearly where the ground is and where the sky is. The more carefully the training program is designed, the more efficiently the network learns, and designing it takes modern scientists considerable effort. Within the same task, if the network is given a different photo to analyze, it may not process it accurately right away, but based on what it learned during training it will work out where the ground is and where the clouds, the sky or something else are.
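The training loop described above can be sketched with the classic perceptron learning rule, in which the weights change only after a wrong answer. This is a swapped-in textbook rule rather than the article's exact procedure, and the toy “photo” (points above y = 0.5 labelled sky, the rest ground), the learning rate and the function names are hypothetical. Starting from zero weights, the untrained neuron calls every point sky, which on this balanced data is right exactly half the time, like a coin toss.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def predict(weights: Sequence[float], point: Point) -> int:
    """Threshold neuron: weights[0] is the bias, weights[1:] multiply x and y."""
    total = weights[0] + weights[1] * point[0] + weights[2] * point[1]
    return 1 if total >= 0 else -1


def accuracy(weights: Sequence[float], data: Sequence[Tuple[Point, int]]) -> float:
    hits = sum(1 for point, label in data if predict(weights, point) == label)
    return hits / len(data)


def train(data: Sequence[Tuple[Point, int]], lr: float = 0.1, max_epochs: int = 1000) -> List[float]:
    """Perceptron rule: only a wrong answer changes the weights (the 'penalty')."""
    weights = [0.0, 0.0, 0.0]  # untrained: every point is called "sky"
    for _ in range(max_epochs):
        mistakes = 0
        for (x, y), label in data:
            if predict(weights, (x, y)) != label:
                # Shift every weight towards the answer the teacher knows.
                weights[0] += lr * label
                weights[1] += lr * label * x
                weights[2] += lr * label * y
                mistakes += 1
        if mistakes == 0:  # a full pass without errors: training is done
            break
    return weights


# Hypothetical labelled "photo": points with y above 0.5 are sky (1), the rest ground (-1).
training_data = [((x, y), 1 if y > 0.5 else -1)
                 for x in (0.0, 0.25, 0.5, 0.75, 1.0)
                 for y in (0.1, 0.2, 0.3, 0.7, 0.8, 0.9)]

print(accuracy([0.0, 0.0, 0.0], training_data))  # 0.5: no better than a coin toss
trained = train(training_data)
print(accuracy(trained, training_data))          # 1.0 on the examples it was taught
print(predict(trained, (0.6, 0.95)), predict(trained, (0.6, 0.05)))  # two new points
```

Because the two classes are separated by a clear gap, the perceptron convergence theorem guarantees that the loop stops after a finite number of corrections. The last line plays the role of the “different photo”: those points were never in the training set, yet the learned weights place them on the correct sides.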

Application of the idea in reality

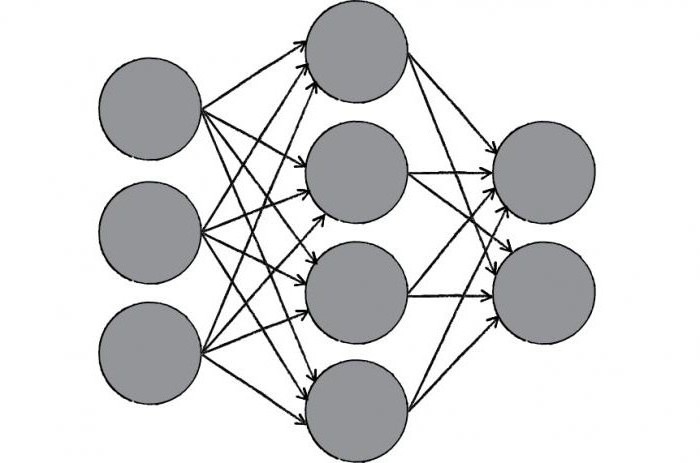

In reality, of course, neural networks are far more complicated than described above, although the principle is the same. The main task of the elements that make up a neural network is to systematize numerical information. With many elements the task becomes more complicated, because a neuron's input may come not from outside but from another perceptron that has already done its part of the work.

Returning to the task above, one can imagine the following processes inside the network: one neuron distinguishes blue pixels from all others, a second processes the coordinates, and a third analyzes the results of the first two and decides whether the given point shows ground or sky. Moreover, sorting pixels into blue and other can be entrusted to several neurons at once, with their results summed. The perceptrons that give better, more accurate results receive a bonus in the form of greater weight, and their results take priority when the task is processed again. Of course, such a network is extremely large and processes an enormous mountain of information, but it makes it possible to account for errors, analyze them and prevent them in the future. Many neural-network implants in science fiction books work on this principle (when the authors bother to think about how they work at all).
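The three-neuron division of labor described above can be wired by hand to show how one neuron's output becomes another's input. This sketch assumes hypothetical rules (a pixel counts as blue when its blue channel beats the average of red and green by at least 0.1; a point counts as high when y is at least 0.5) and hand-set weights; in a real network these weights would be learned rather than chosen.

```python
from typing import Sequence, Tuple


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """One threshold neuron: weighted sum plus bias, squashed to 1 or -1."""
    total = bias + sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= 0 else -1


def sky_or_ground(rgb: Tuple[float, float, float], y: float) -> str:
    r, g, b = rgb
    # Layer 1, neuron A: "is this pixel blue?" (blue must beat the red/green average by 0.1).
    is_blue = neuron([r, g, b], [-0.5, -0.5, 1.0], bias=-0.1)
    # Layer 1, neuron B: "is this point high up?" (y runs from 0 at the bottom to 1 at the top).
    is_high = neuron([y], [1.0], bias=-0.5)
    # Layer 2, neuron C: its inputs are the outputs of A and B, not raw data.
    # With weights 1, 1 and bias 1 it fires when at least one of them says "sky".
    decision = neuron([is_blue, is_high], [1.0, 1.0], bias=1.0)
    return "sky" if decision == 1 else "ground"


print(sky_or_ground((0.2, 0.4, 0.9), 0.3))    # blue pixel low down: sky
print(sky_or_ground((0.2, 0.7, 0.2), 0.2))    # green pixel low down: ground
print(sky_or_ground((0.9, 0.9, 0.95), 0.8))   # white cloud high up: sky
```

A white cloud is not blue, but the height neuron still votes sky, and the output neuron needs only one vote. Weights of 1 and 1 with a bias of 1 make that last neuron behave like a logical OR.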

Milestones

This may surprise a layman, but the first neural networks appeared as early as 1958. This was possible because artificial neurons are similar to other computer elements, which pass information to each other as binary numbers. At the end of the fifties, a machine called the “Mark I Perceptron” was built, implementing the principles of neural networks. In other words, the first neural network appeared only about a decade after the first computers were built.

The neurons of this first network were built from resistors and radio tubes, since the kind of software modern scientists use did not exist yet. The work was led by Frank Rosenblatt, who created a two-layer network. External data reached the network through an input of 400 points, a 20 by 20 grid of photocells. The machine soon learned to recognize geometric shapes, which already suggested that, as technology improved, neural networks could learn to read letters. And who knows what else?

First neural network

As history shows, Rosenblatt was passionate about his work and knew the field perfectly: he was a specialist in neurophysiology. He taught a fascinating and popular university course in which anyone could learn how the human brain might be reproduced in a technical device. Even then, the scientific community hoped that intelligent robots able to move, talk and build similar systems would soon become possible. Who knows, maybe these robots would have gone on to colonize other planets?

Rosenblatt's enthusiasm is understandable. Scientists believed that artificial intelligence could be achieved by fully embodying mathematical logic in a machine. The Turing test already existed by then, and Asimov had popularized the idea of robotics. The scientific community was convinced that the exploration of the universe was only a matter of time.

Skepticism justified itself

Already in the sixties, some scientists argued with Rosenblatt and the other great minds working on artificial intelligence. A fairly accurate idea of their reasoning can be gained from the publications of Marvin Minsky, a well-known figure in the field. Isaac Asimov and Stanley Kubrick are known to have spoken highly of Minsky's abilities (Minsky advised Kubrick on 2001: A Space Odyssey). Minsky was not opposed to neural networks as such: his work with Kubrick shows how seriously he took thinking machines, and in the fifties he himself worked on machine learning. Nevertheless, Minsky had no patience for views he considered mistaken and criticized hopes that, at the time, had no solid foundation. Incidentally, Marvin from Douglas Adams's books is said to be named after Minsky.

Criticism of neural networks and of the approach of that time was systematized in the book Perceptrons, written with Seymour Papert and published in 1969. Many believe this book all but killed interest in neural networks, because a scientist with an excellent reputation clearly showed that the Mark I had a number of flaws. First, two layers were clearly insufficient: despite its gigantic size and huge energy consumption, the machine could learn too little. The second point of criticism concerned the algorithms Rosenblatt had developed for training the network. According to Minsky, information about errors was very likely to be lost, so the layer that needed it simply did not receive enough data to analyze the situation correctly.
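A classic way to see the limitation of a single trainable layer of weights is the XOR (exclusive or) function: it outputs 1 when exactly one input is 1, and no single straight line separates those cases, so a lone threshold neuron can never get all four right, however long it trains. The sketch below, with hypothetical names and hand-set weights for the second network, contrasts the perceptron rule applied to one neuron with a small network that adds a hidden layer.

```python
from typing import List, Sequence, Tuple

# XOR with inputs 0/1 and outputs 1 ("exactly one input is on") or -1.
XOR_DATA: List[Tuple[Tuple[int, int], int]] = [
    ((0, 0), -1), ((0, 1), 1), ((1, 0), 1), ((1, 1), -1),
]


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> int:
    """Threshold neuron: 1 if the weighted sum plus bias is non-negative, else -1."""
    total = bias + sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= 0 else -1


def train_single_neuron(epochs: int = 1000, lr: float = 0.1) -> Tuple[List[float], float]:
    """Perceptron rule on XOR: it keeps correcting itself but never settles."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, label in XOR_DATA:
            if neuron(inputs, weights, bias) != label:
                weights = [w + lr * label * x for w, x in zip(weights, inputs)]
                bias += lr * label
    return weights, bias


def two_layer_xor(x1: int, x2: int) -> int:
    """Hand-wired network: XOR = (x1 OR x2) AND NOT (x1 AND x2)."""
    h_or = neuron([x1, x2], [1.0, 1.0], bias=-0.5)        # hidden neuron 1: OR
    h_and = neuron([x1, x2], [1.0, 1.0], bias=-1.5)       # hidden neuron 2: AND
    return neuron([h_or, h_and], [1.0, -1.0], bias=-1.5)  # output: OR but not AND


weights, bias = train_single_neuron()
single_acc = sum(neuron(x, weights, bias) == y for x, y in XOR_DATA) / len(XOR_DATA)
two_layer_acc = sum(two_layer_xor(*x) == y for x, y in XOR_DATA) / len(XOR_DATA)
print(single_acc)     # at most 0.75, whatever the number of epochs
print(two_layer_acc)  # 1.0
```

The hidden layer solves the problem only because its weights were set by hand here; how to train such inner layers automatically was exactly the open question of the time.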

Things stalled

Although Minsky's main aim was to point out mistakes to his colleagues and spur them to improve their work, things turned out differently. Rosenblatt died in 1971, and there was no one to carry on his work. This was when the era of computers began, and the field advanced by leaps and bounds. The best minds in mathematics and computer science were drawn into it, and this approach to artificial intelligence seemed a needless waste of effort and resources.

For more than a decade, neural networks did not attract the attention of the scientific community. The turning point came when cyberpunk came into fashion: formulas were found that calculate errors with high accuracy. In 1986, the problem Minsky had formulated received its third solution (all three were developed independently by different groups of scientists), and this discovery prompted enthusiasts to develop the field anew, so work on neural networks intensified again. The term “perceptrons”, however, quietly gave way to “cognitive computing”, and researchers abandoned experimental hardware in favor of software built with the most effective programming techniques. Within just a few years, neurons were being assembled into complex structures that could cope with quite serious tasks. Over time it became possible, for example, to create programs that read human handwriting. The first networks capable of self-learning appeared: they found the correct answers on their own, without hints from the person operating the computer. Neural networks found practical applications; for example, banks in America use programs built on them to read the numbers on checks.
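The 1986 result alluded to here is usually identified with backpropagation, popularized that year by Rumelhart, Hinton and Williams. It uses the chain rule to compute how much each weight in every layer contributed to the output error, which is precisely the information Minsky worried would be lost. The sketch below is a minimal illustration under stated assumptions: a hypothetical network with two inputs, two hidden sigmoid neurons and one sigmoid output, squared error, and arbitrary parameter values. It checks the backpropagated gradient against a brute-force finite-difference estimate and then takes one gradient-descent step.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical parameter layout for the 2-2-1 network:
# p[0..3] hidden weights W1[j][i] = p[2*j + i], p[4..5] hidden biases,
# p[6..7] output weights, p[8] output bias.


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(p: Sequence[float], x: Sequence[float]) -> Tuple[List[float], float]:
    h = [sigmoid(p[4 + j] + p[2 * j] * x[0] + p[2 * j + 1] * x[1]) for j in range(2)]
    y = sigmoid(p[8] + p[6] * h[0] + p[7] * h[1])
    return h, y


def loss(p: Sequence[float], x: Sequence[float], target: float) -> float:
    _, y = forward(p, x)
    return 0.5 * (y - target) ** 2


def backprop(p: Sequence[float], x: Sequence[float], target: float) -> List[float]:
    h, y = forward(p, x)
    # Error signal at the output neuron: dLoss / d(output pre-activation).
    delta_out = (y - target) * y * (1.0 - y)
    # Send it back through each output weight to the hidden neurons.
    delta_hid = [delta_out * p[6 + j] * h[j] * (1.0 - h[j]) for j in range(2)]
    grad = [0.0] * 9
    for j in range(2):
        grad[2 * j] = delta_hid[j] * x[0]
        grad[2 * j + 1] = delta_hid[j] * x[1]
        grad[4 + j] = delta_hid[j]
        grad[6 + j] = delta_out * h[j]
    grad[8] = delta_out
    return grad


def numeric_gradient(p: Sequence[float], x: Sequence[float], target: float,
                     eps: float = 1e-6) -> List[float]:
    """Brute force: nudge each parameter up and down and watch the loss."""
    grad = []
    for k in range(len(p)):
        up, down = list(p), list(p)
        up[k] += eps
        down[k] -= eps
        grad.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grad


params = [0.5, -0.3, 0.8, 0.2, 0.1, -0.4, 0.7, -0.6, 0.05]
x, target = (1.0, 0.0), 1.0

analytic = backprop(params, x, target)
numeric = numeric_gradient(params, x, target)
print(max(abs(a - n) for a, n in zip(analytic, numeric)))  # tiny: the formulas agree

lr = 0.1
updated = [w - lr * g for w, g in zip(params, analytic)]
print(loss(params, x, target), loss(updated, x, target))  # the error shrinks
```

The finite-difference version needs two extra passes through the network per weight, while backpropagation gets every gradient from one forward and one backward pass, which is what makes training large multilayer networks practical.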

Leap forward

In the 90s, it became clear that a key feature of neural networks deserving special attention from scientists was their ability to explore a given area in search of the right solution without prompting from a person. Such a program uses trial and error and derives rules of behavior from the results.
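Trial and error of this kind can be sketched with an epsilon-greedy agent, a standard reinforcement-learning technique that the article itself does not name. The hypothetical agent below chooses among three actions whose average payoffs it does not know: it tries each once, then mostly repeats whatever has paid best so far and occasionally experiments at random. The payoffs, noise level, step count and `epsilon` are assumptions for illustration.

```python
import random
from typing import List


def learn_by_trial_and_error(true_payoffs: List[float], steps: int = 500,
                             epsilon: float = 0.1, seed: int = 0) -> List[float]:
    """Epsilon-greedy agent: returns its estimated payoff for each action."""
    rng = random.Random(seed)
    n = len(true_payoffs)
    estimates = [0.0] * n
    counts = [0] * n

    def try_action(a: int) -> None:
        # The environment answers with a slightly noisy reward; nobody tells
        # the agent which action is right.
        reward = true_payoffs[a] + rng.uniform(-0.05, 0.05)
        counts[a] += 1
        # Running average of everything this action has earned so far.
        estimates[a] += (reward - estimates[a]) / counts[a]

    for a in range(n):  # try every action once
        try_action(a)
    for _ in range(steps):
        if rng.random() < epsilon:
            try_action(rng.randrange(n))  # explore: occasionally try something at random
        else:
            try_action(max(range(n), key=lambda a: estimates[a]))  # exploit the best so far
    return estimates


estimates = learn_by_trial_and_error([0.2, 0.5, 0.9])
best = max(range(3), key=lambda a: estimates[a])
print(best)  # 2
```

The learned “rule of behavior” is simply to pick the action with the highest estimate. Because the reward noise is bounded by 0.05 and the payoffs are far apart, every estimate stays within 0.05 of its true value, so the agent settles on the third action.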

This period was marked by a surge of public interest in home-made robots: enthusiastic designers all over the world began building their own robots capable of learning. In 1997 came the first truly serious success for artificial intelligence on the world stage: for the first time, a computer (IBM's Deep Blue) beat the world's best chess player, Garry Kasparov, in a match. By the end of the nineties, however, scientists concluded that they had hit a ceiling and that artificial intelligence could not grow further. Moreover, a well-optimized algorithm solved the same tasks far more efficiently than any neural network. Neural networks kept a few niches, such as recognizing archived texts, but nothing more difficult was within their reach. As modern scholars put it, the main obstacle was a lack of technical capabilities.

Nowadays

Nowadays, neural networks are a way of solving the most complex problems with a “the solution finds itself” approach. This is not the result of any scientific revolution: modern scientists, the luminaries of the programming world, simply have access to powerful technology that lets them put into practice what people could previously only imagine in general terms. Returning to Cicero's remark about monkeys and tokens: give the animals someone who rewards them for every correct phrase, and they will not only produce a meaningful text but write a new “War and Peace”, and no worse than the original.

Today's neural networks are in service with the largest information technology companies. These are multilayer neural networks running on powerful servers that take advantage of the World Wide Web and of the data accumulated over past decades.