If you study computer technology seriously rather than superficially, you certainly need to know how different forms of information are represented in a computer. This is one of the fundamental questions, since not only the use of programs and operating systems but programming itself ultimately rests on these basics.

Lesson "Representation of information in a computer": the basics

In general, the way a computer perceives information or commands, converts them into file formats and returns a finished result to the user differs somewhat from everyday notions of information.

The fact is that all existing systems are built on just two logical values, “true” and “false” (true, false). Put more simply, yes or no.

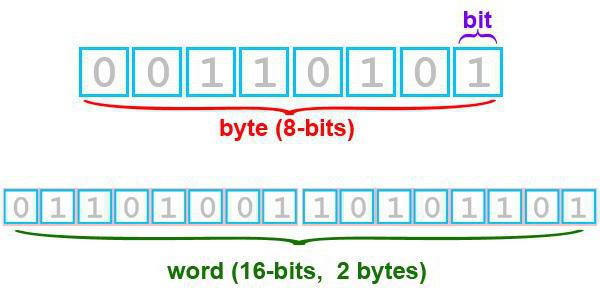

Computers, of course, do not understand words, so at the dawn of computing a special digital system was created with a conventional code in which one stands for "true" and zero for "false". That is how the so-called binary representation of information in a computer came about. The number of zeros and ones an information object needs also determines its size.

The smallest unit for measuring this size is the bit, a binary digit that can be either 0 or 1. Modern systems, however, do not work with such small units directly: almost all methods of representing information in a computer group eight bits together into a byte. Since $2^8 = 256$, one byte can encode any one of 256 possible values, for example a character. Binary code is thus the foundation of any information object, and the following sections show how this looks in practice.
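As an illustration (a Python sketch, not part of the original lesson), the snippet below checks the arithmetic above: a logical value maps to one binary digit, eight bits give 2^8 = 256 combinations, and every value from 0 to 255 fits into one byte. The helper name `to_byte_bits` is purely illustrative.

```python
BITS_PER_BYTE = 8

# The logical values true/false correspond to the binary digits 1/0
print(int(True), int(False))  # 1 0

# Eight bits give 2**8 distinct combinations
values_per_byte = 2 ** BITS_PER_BYTE
print(values_per_byte)  # 256

def to_byte_bits(n):
    """Return the 8-bit binary string for a value in 0..255."""
    if not 0 <= n < values_per_byte:
        raise ValueError("a single byte holds only 0..255")
    return format(n, "08b")

print(to_byte_bits(0))    # 00000000
print(to_byte_bits(65))   # 01000001
print(to_byte_bits(255))  # 11111111
```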

Informatics: representation of information in a computer. Fixed-point numbers

Since we started with numbers, let us consider how the system perceives them. Numerical information in a computer can roughly be divided into fixed-point and floating-point numbers. The first type includes ordinary integers, which can be thought of as fixed-point numbers with nothing after the decimal point.

Integers of this type usually occupy 1, 2 or 4 bytes. In a signed number, the most significant (leftmost) bit holds the sign: 0 for positive and 1 for negative. Thus, in a 2-byte (16-bit) representation, unsigned numbers range from $0$ to $2^{16} - 1 = 65535$, while signed numbers range from $-2^{15}$ to $2^{15} - 1$, that is, from $-32768$ to $32767$.
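The ranges above can be checked with a short Python sketch. It assumes two's-complement encoding, which virtually all current processors use for signed integers; in that scheme the leftmost bit still acts as the sign bit, as described above, but the remaining bits are not a plain magnitude. The names `int_range` and `signed_bits` are illustrative, not from any library.

```python
def int_range(bits, signed):
    """Smallest and largest integer that fits in `bits` bits."""
    if signed:
        return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return 0, 2 ** bits - 1

def signed_bits(value, bits):
    """Two's-complement bit pattern of `value`; the leftmost bit is the sign."""
    low, high = int_range(bits, signed=True)
    if not low <= value <= high:
        raise OverflowError(f"{value} does not fit in {bits} signed bits")
    # Masking keeps only the lowest `bits` bits of Python's unbounded integer
    return format(value & ((1 << bits) - 1), f"0{bits}b")

for size in (1, 2, 4):
    print(size, "bytes:", int_range(8 * size, signed=False), int_range(8 * size, signed=True))

print(signed_bits(5, 8))   # 00000101  (sign bit 0)
print(signed_bits(-5, 8))  # 11111011  (sign bit 1)
```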

Floating-point representation

Now consider the second type of numbers. The school curriculum on the topic "Representation of information in a computer" (Grade 9) does not cover floating-point numbers. Operations on them are considerably more complex and are used, for example, in computer games. As a brief digression, one of the main performance indicators of modern graphics accelerators is how fast they perform operations on these numbers.

Floating-point numbers use an exponential form in which the position of the point can change (hence "floating"). Any number $A$ is represented by the basic formula $A = m_A \cdot q^P$, where $m_A$ is the mantissa, $q$ is the base of the number system, and $P$ is the order (exponent) of the number.

The mantissa must satisfy $q^{-1} \le |m_A| < 1$; that is, it must be a proper fraction whose first digit after the point is nonzero, and the order must be an integer. Any decimal number can easily be written in this normalized exponential form. Numbers of this type occupy 4 or 8 bytes.

For example, the decimal number 999.999 written with a normalized mantissa becomes $0.999999 \cdot 10^3$.
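A minimal Python sketch of normalization under the rule $q^{-1} \le |m_A| < 1$. For base 10 it uses the standard `decimal` module to avoid binary rounding noise; for base 2, the standard `math.frexp` function happens to follow the same convention ($0.5 \le |m| < 1$). Real hardware following IEEE 754 normalizes the mantissa to $1 \le m < 2$ instead and stores its leading 1 implicitly, so this is the textbook model rather than the exact bit layout. `normalize_decimal` is a hypothetical helper name.

```python
import math
import struct
from decimal import Decimal

def normalize_decimal(text):
    """Split a decimal number into (m, p) with 0.1 <= |m| < 1 and value == m * 10**p."""
    x = Decimal(text)
    if x == 0:
        return Decimal(0), 0
    p = x.adjusted() + 1      # adjusted() is the exponent of the leading digit
    return x.scaleb(-p), p    # shift the point p places to the left

m, p = normalize_decimal("999.999")
print(m, p)  # 0.999999 3

# For base q = 2, math.frexp uses the same rule: 0.5 <= |m| < 1
m2, e2 = math.frexp(999.999)
print(e2)  # 10, because 2**9 <= 999.999 < 2**10
print(math.ldexp(m2, e2) == 999.999)  # True: m2 * 2**e2 restores the value

# Single and double precision occupy 4 and 8 bytes respectively
print(len(struct.pack("<f", 999.999)), len(struct.pack("<d", 999.999)))  # 4 8
```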

Text data representation: a bit of history

Text is still the kind of information computer users work with most, and textual information in a computer follows the same principles of binary code.

However, because there are so many languages in the world, special encoding systems, or code tables, are used to represent text. In the MS-DOS era, CP866 was the main standard for Cyrillic, while Apple computers used their own Mac encoding. Around the same time, a dedicated encoding for the Russian language, ISO 8859-5, was introduced. As computer technology developed, new standards had to be introduced.

Varieties of Encodings

For example, in the early 1990s the universal Unicode standard appeared. Its distinguishing feature was that each character was allotted not one byte but two (in its original 16-bit form); today Unicode covers far more characters and is usually stored in variable-length encodings such as UTF-8 and UTF-16.
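To see how many bytes a character actually takes, here is a small Python sketch comparing UTF-8 and UTF-16 for a Latin letter, a Cyrillic letter, the euro sign and an emoji. Characters outside the original 16-bit range (like the emoji) need four bytes even in UTF-16. `encoded_sizes` is an illustrative name.

```python
def encoded_sizes(ch):
    """Code point and byte length of one character in UTF-8 and UTF-16."""
    return ord(ch), len(ch.encode("utf-8")), len(ch.encode("utf-16-be"))

# Latin A, Cyrillic Ya, euro sign, grinning-face emoji (escapes avoid source-encoding issues)
for ch in ["A", "\u042F", "\u20AC", "\U0001F600"]:
    code, utf8, utf16 = encoded_sizes(ch)
    print(f"U+{code:04X}: UTF-8 {utf8} byte(s), UTF-16 {utf16} byte(s)")
```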

Other encodings remained in use alongside it. On Windows, CP1251 is the most widely used Cyrillic encoding, while for Russian, KOI8-R is also still in use; it descends from the KOI-8 encoding of the 1970s and was widely used on UNIX systems.
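The practical consequence of having several Cyrillic code tables is that the same letter gets a different byte in each one, and text decoded with the wrong table comes out garbled. The sketch below uses Python's built-in codecs (`cp866`, `cp1251`, `koi8_r`, `iso8859_5`, `mac_cyrillic`) to show this; the sample word is an arbitrary choice.

```python
CYRILLIC_CAPITAL_A = "\u0410"
WORD = "\u041f\u0440\u0438\u0432\u0435\u0442"  # the Russian word "privet" ("hello")

for encoding in ("cp866", "cp1251", "koi8_r", "iso8859_5", "mac_cyrillic"):
    # Same letter, different byte in each code table
    print(encoding, CYRILLIC_CAPITAL_A.encode(encoding).hex())

# Text saved in CP1251 but opened as KOI8-R turns into different letters
garbled = WORD.encode("cp1251").decode("koi8_r")
print(garbled == WORD)  # False

# UTF-8 avoids the ambiguity, at the cost of two bytes per Cyrillic letter
print(len(WORD.encode("cp1251")), len(WORD.encode("utf-8")))  # 6 12
```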

Text representation in a computer is based on the ASCII table, which has a basic and an extended part. The basic part covers codes 0 to 127, and the extended part, whose contents depend on the code page, covers codes 128 to 255. The first 32 codes (0 to 31) are not assigned to printable keyboard characters but to control characters, such as tab (9), line feed (10) and carriage return (13); code 32 is the space.
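A quick way to explore the ASCII layout described above, sketched in Python: each character maps to a code below 128, and the non-printable codes turn out to be exactly 0 to 31 plus code 127 (DEL), which is also a control character.

```python
# Printable characters map to codes below 128 (7 bits are enough)
for ch in "Hi!":
    print(repr(ch), ord(ch), format(ord(ch), "07b"))

# Codes 0..127 that do not correspond to printable characters
control_codes = [code for code in range(128) if not chr(code).isprintable()]
print(control_codes[:3], "...", control_codes[-2:])  # [0, 1, 2] ... [31, 127]

# A few well-known control characters, plus the space
print(ord("\t"), ord("\n"), ord("\r"), ord(" "))  # 9 10 13 32
```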

Graphic Images: Basic Types

Graphics, which are used everywhere in today's digital world, have their own nuances. When looking at how graphical information is represented in a computer, you should first consider the main types of images. There are two of them: vector and raster.

Vector graphics are built from primitive shapes (lines, circles, curves, polygons, etc.), text inserts and fills of a given color. Raster images are based on a rectangular matrix whose elements are called pixels, and the brightness and color of each pixel can be set individually.
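To make the vector/raster distinction concrete, the hypothetical Python sketch below describes a circle as a vector object (centre and radius) and converts it into a raster matrix by checking whether each pixel centre lies inside the circle. Scaling the vector description is exact, whereas the raster has to be recomputed at the new size. This is a simplified rasterizer without anti-aliasing, chosen only for illustration.

```python
def rasterize_circle(cx, cy, r, width, height):
    """Sample a vector circle at each pixel centre; 1 = inside, 0 = outside."""
    return [
        [1 if (x + 0.5 - cx) ** 2 + (y + 0.5 - cy) ** 2 <= r * r else 0
         for x in range(width)]
        for y in range(height)
    ]

circle = {"cx": 4, "cy": 4, "r": 3}   # vector form: just three numbers
small = rasterize_circle(**circle, width=8, height=8)

# Scaling the vector description is exact; the raster must be recomputed
big = rasterize_circle(circle["cx"] * 2, circle["cy"] * 2, circle["r"] * 2, 16, 16)

for row in small:
    print("".join("#" if pixel else "." for pixel in row))
print(sum(map(sum, small)), "pixels set at 8x8,", sum(map(sum, big)), "at 16x16")
```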

Vector images

Today, vector images have a narrower range of uses. They are well suited, for example, to drawings and technical diagrams or to two- and three-dimensional models of objects.

Examples of static vector formats include PDF, WMF and PCL. For animation, the Macromedia Flash standard was long the main choice. However, when quality or performance matters for operations more complex than simple scaling, raster formats are usually the better option.

Bitmap images

With raster objects, the situation is much more complicated. Representing information as a matrix requires additional parameters: the color depth in bits (the number of bits per pixel, which determines how many colors the palette can hold) and the size of the matrix in pixels (width × height). The number of pixels per inch (often called DPI) is a separate parameter that describes pixel density rather than the size of the matrix.

So the palette can consist of 16, 256, 65,536 or 16,777,216 colors, and matrix sizes vary; 800×600 pixels (480,000 dots) was once among the most common resolutions. From these parameters you can determine the number of bits needed to store the image. First, use the formula $N = 2^I$, where $N$ is the number of colors and $I$ is the color depth in bits.

Then the amount of information is calculated. For example, let us calculate the size of an image with 65,536 colors and a matrix of 1024×768 pixels. The solution is as follows:

- $I = \log_2 65536 = 16$ bits per pixel;

- the number of pixels is $1024 \cdot 768 = 786\,432$;

- the amount of memory is $16 \cdot 786\,432 = 12\,582\,912$ bits, that is, $12\,582\,912 / 8 = 1\,572\,864$ bytes, or 1.5 MB (counting 1 MB as $1024 \cdot 1024$ bytes).
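The same calculation as a Python sketch. It assumes the image is stored uncompressed and ignores file headers, so real files in formats such as BMP will be slightly larger and compressed formats much smaller. `color_depth` (an illustrative name) returns the smallest $I$ with $2^I \ge N$, which equals $\log_2 N$ when $N$ is a power of two.

```python
def color_depth(colors):
    """Smallest I with 2**I >= N; equals log2(N) when N is a power of two."""
    return (colors - 1).bit_length()

def raster_size_bytes(colors, width, height):
    """Uncompressed size: bits per pixel x number of pixels, rounded up to bytes."""
    bits = color_depth(colors) * width * height
    return (bits + 7) // 8

size = raster_size_bytes(65536, 1024, 768)
print(color_depth(65536), size, size / 2**20)  # 16 1572864 1.5
```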

Varieties of audio: the main approaches to synthesis

Audio information in a computer is represented according to the same basic principles described above. But, as with any other kind of information object, sound also has its own additional characteristics.

Unfortunately, high-quality sound recording and playback came to computers relatively late. While playback was merely difficult, synthesizing a realistic-sounding musical instrument was practically impossible, so various companies introduced their own standards. Today, FM synthesis and wavetable synthesis are the most widely used methods.

FM (frequency modulation) synthesis relies on the idea that a continuous natural sound can be approximated by a combination of simple harmonic signals: the frequency of one oscillator (the carrier) is modulated by another (the modulator), producing a rich spectrum from just a few parameters stored in the computer's memory. Because the result only approximates the original sound, some of its components are inevitably lost, which affects quality.
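A minimal two-oscillator FM sketch in Python, to show how a few parameters (carrier frequency, modulator frequency, modulation index) generate a complex waveform. As is common in digital FM implementations, it actually modulates the carrier's phase, which produces the same family of spectra; the 44.1 kHz sample rate and the parameter values are illustrative assumptions.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed CD rate)

def fm_tone(carrier_hz, modulator_hz, index, duration_s, sample_rate=SAMPLE_RATE):
    """Two-operator FM: a modulator sine shifts the phase of a carrier sine."""
    samples = []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        modulator = index * math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(2 * math.pi * carrier_hz * t + modulator))
    return samples

# index = 0 gives a pure sine; larger indices add more harmonics
tone = fm_tone(440, 220, index=2.0, duration_s=0.01)
print(len(tone), min(tone), max(tone))
```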

Wavetable synthesis assumes a pre-created table of recordings of real instruments; these recordings are called samples. Playback is often driven by MIDI (Musical Instrument Digital Interface) commands, which specify the instrument, pitch, duration, intensity and its changes, effect parameters and other characteristics. As a result, the sound comes quite close to natural.
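In wavetable playback, MIDI carries the instructions while the stored samples provide the sound. The Python sketch below builds raw MIDI 1.0 messages: program change selects the instrument, note-on and note-off mark the start and end of a note (so duration is the time between them), and velocity expresses intensity. It also converts a note number to a pitch in hertz using equal temperament with note 69 = A4 = 440 Hz. The function names are illustrative; a real program would send these bytes to a synthesizer through a MIDI library or device.

```python
def _check_range(value, limit, name):
    """MIDI data bytes are 7-bit (0..127); channels are 0..15."""
    if not 0 <= value < limit:
        raise ValueError(f"{name} must be between 0 and {limit - 1}")

def program_change(channel, program):
    """Select an instrument; in General MIDI, program 0 is Acoustic Grand Piano."""
    _check_range(channel, 16, "channel")
    _check_range(program, 128, "program")
    return bytes([0xC0 | channel, program])

def note_on(channel, note, velocity):
    """Start a note; velocity (0..127) expresses how hard it is played."""
    _check_range(channel, 16, "channel")
    _check_range(note, 128, "note")
    _check_range(velocity, 128, "velocity")
    return bytes([0x90 | channel, note, velocity])

def note_off(channel, note, velocity=0):
    """End a note; the time between note-on and note-off is its duration."""
    _check_range(channel, 16, "channel")
    _check_range(note, 128, "note")
    _check_range(velocity, 128, "velocity")
    return bytes([0x80 | channel, note, velocity])

def note_to_hz(note):
    """Equal-tempered pitch with MIDI note 69 (A4) = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)

message = program_change(0, 0) + note_on(0, 60, 100) + note_off(0, 60)
print(message.hex(" "))          # c0 00 90 3c 64 80 3c 00
print(round(note_to_hz(60), 2))  # 261.63 (middle C)
```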

Modern formats

Early on, the WAV format was the standard (the sound is stored essentially as a sampled waveform, usually uncompressed), but over time it proved very inconvenient, if only because such files take up too much storage space.

Compression technologies then emerged, and new formats came with them. The best known today include MP3, OGG, WMA and FLAC, among many others.

Even so, the main parameters of any sound file are still the sampling rate (the standard is 44.1 kHz, although higher and lower values exist) and the bit depth, which sets the number of signal levels (for example, 16 bits give $2^{16} = 65536$ levels; 24 and 32 bits are also used). Digitization of this kind is how a computer represents sound: the original analog signal (any sound in nature is analog to begin with) is turned into a sequence of numbers.
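A Python sketch of digitization: it samples a 440 Hz sine wave at 44.1 kHz, quantizes each sample to 16 bits and writes the result into an in-memory WAV file using the standard `wave` module. It also computes the uncompressed size of one minute of stereo audio, which shows why WAV files are so large. The tone, duration and helper names are assumptions for illustration.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44100   # samples per second
BIT_DEPTH = 16        # bits per sample -> 2**16 = 65536 signal levels

def sample_sine(freq_hz, duration_s, sample_rate=SAMPLE_RATE, bit_depth=BIT_DEPTH):
    """Sample and quantize a sine wave into signed integer PCM values."""
    max_level = 2 ** (bit_depth - 1) - 1
    count = int(duration_s * sample_rate)
    return [round(max_level * math.sin(2 * math.pi * freq_hz * n / sample_rate))
            for n in range(count)]

def pcm_size_bytes(duration_s, sample_rate=SAMPLE_RATE, bit_depth=BIT_DEPTH, channels=2):
    """Uncompressed size: samples x channels x bytes per sample."""
    return int(duration_s * sample_rate) * channels * (bit_depth // 8)

samples = sample_sine(440, 1.0)
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(BIT_DEPTH // 8)
    wav.setframerate(SAMPLE_RATE)
    wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))

print(len(samples), "samples,", len(buffer.getvalue()), "bytes of WAV")
print(pcm_size_bytes(60) / 1_000_000, "MB per stereo minute")  # 10.584 MB per stereo minute
```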

Video representation

While the problems with sound were solved fairly quickly, video did not go so smoothly. A clip, film or even a video game combines moving images with sound. It would seem, what could be easier than combining moving graphics with sound? As it turned out, this was a real problem.

Technically, you first have to store the first frame of each scene, called the key frame, and then save only the differences from it (difference frames). Worse still, digitized or computer-generated videos were so large that storing them on a computer or on removable media was practically impossible.
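The key-frame idea can be shown with a deliberately simple Python sketch: the first frame is stored whole, and each subsequent frame only as a list of changed pixels. Real codecs use far more elaborate techniques (motion compensation, transforms, lossy quantization), so this pixel-by-pixel differencing is only an illustration; the function names and the tiny 4x4 frames are hypothetical.

```python
def encode_frames(frames):
    """Keep the first (key) frame whole; store later frames as (index, new_value) changes."""
    key = list(frames[0])
    deltas = []
    previous = key
    for frame in frames[1:]:
        if len(frame) != len(key):
            raise ValueError("all frames must have the same size")
        changes = [(i, new) for i, (old, new) in enumerate(zip(previous, frame)) if old != new]
        deltas.append(changes)
        previous = frame
    return key, deltas

def decode_frames(key, deltas):
    """Rebuild every frame by applying each list of changes to the previous frame."""
    current = list(key)
    frames = [list(current)]
    for changes in deltas:
        for i, value in changes:
            current[i] = value
        frames.append(list(current))
    return frames

# Three tiny 4x4 grayscale frames (flattened): a bright dot moves one pixel right
f0, f1, f2 = [0] * 16, [0] * 16, [0] * 16
f0[5], f1[6], f2[7] = 255, 255, 255

key, deltas = encode_frames([f0, f1, f2])
print(deltas)  # [[(5, 0), (6, 255)], [(6, 0), (7, 255)]]
print(decode_frames(key, deltas) == [f0, f1, f2])  # True
```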

The problem was solved with the appearance of the AVI format, a kind of universal container made up of a set of blocks (chunks) that can hold arbitrary data, compressed in different ways. As a result, even two files in the same AVI format can differ significantly from each other.
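AVI, like WAV, is built on Microsoft's RIFF layout: a file is a sequence of chunks, each with a four-character identifier, a 4-byte little-endian size and its data. The Python sketch below walks the top-level chunks of such a file. Since Python's standard library can write WAV but not AVI, it inspects a tiny generated WAV file instead; in an AVI file the video and audio streams sit inside nested `LIST` chunks, which this sketch does not descend into. `list_riff_chunks` is an illustrative name.

```python
import io
import struct
import wave

def list_riff_chunks(data):
    """Return the RIFF form type and the (id, size) of each top-level chunk."""
    riff_id, riff_size, form_type = struct.unpack_from("<4sI4s", data, 0)
    if riff_id != b"RIFF":
        raise ValueError("not a RIFF file")
    chunks = []
    pos = 12                                   # skip 'RIFF', size and form type
    end = min(len(data), 8 + riff_size)
    while pos + 8 <= end:
        chunk_id, chunk_size = struct.unpack_from("<4sI", data, pos)
        chunks.append((chunk_id.decode("latin-1"), chunk_size))
        pos += 8 + chunk_size + (chunk_size & 1)  # chunk data is padded to an even length
    return form_type.decode("latin-1"), chunks

# Build a tiny WAV file in memory (10 frames of 16-bit mono silence) to inspect
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(2)
    wav.setframerate(8000)
    wav.writeframes(bytes(20))

print(list_riff_chunks(buffer.getvalue()))  # ('WAVE', [('fmt ', 16), ('data', 20)])
```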

Many other popular video formats exist today, but all of them also have their own characteristics and parameter values, the most important of which is the number of frames per second.

Codecs and Decoders

Representing video in a computer is unthinkable without codecs, which compress the original content and decompress it during playback. The name itself, short for coder-decoder, reflects both roles: the encoder compresses the signal and the decoder, on the contrary, unpacks it.
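The coder/decoder pairing can be illustrated with Python's standard `zlib` module. This is a stand-in: zlib is a general-purpose lossless compressor, not a video codec, and real video codecs such as H.264 are usually lossy and exploit similarities between frames. The frame size and the synthetic frame below are assumptions chosen to compress well.

```python
import zlib

WIDTH, HEIGHT = 640, 480          # assumed frame size, 3 bytes (RGB) per pixel

# A synthetic frame: black background with a white 100x100 square
frame = bytearray(WIDTH * HEIGHT * 3)
for y in range(190, 290):
    start = (y * WIDTH + 270) * 3
    frame[start:start + 100 * 3] = b"\xff" * (100 * 3)
raw = bytes(frame)

encoded = zlib.compress(raw, 9)     # "coder" side: compress before storing
decoded = zlib.decompress(encoded)  # "decoder" side: unpack during playback

print(len(raw), "bytes raw,", len(encoded), "bytes encoded")
print(decoded == raw)  # True: lossless, the frame is restored bit for bit
```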

Codecs determine how the contents of a container of any format are encoded, and therefore the size of the final file. Resolution also plays an important role, just as with raster graphics; today you can even find Ultra HD (4K, 3840×2160) video.
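Why codecs matter becomes obvious from the raw data rate of uncompressed video. The Python sketch below assumes 24-bit color and 25 frames per second, both illustrative choices, and multiplies them by the resolution; `raw_video_bytes_per_second` is a hypothetical helper name.

```python
def raw_video_bytes_per_second(width, height, bits_per_pixel, fps):
    """Uncompressed data rate: bytes per frame x frames per second."""
    return width * height * bits_per_pixel // 8 * fps

resolutions = {"800x600": (800, 600), "Full HD": (1920, 1080), "Ultra HD 4K": (3840, 2160)}
for name, (w, h) in resolutions.items():
    rate = raw_video_bytes_per_second(w, h, 24, 25)
    print(f"{name}: {rate / 1_000_000:.1f} MB/s, {rate * 60 / 1_000_000_000:.1f} GB per minute")
```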

Conclusion

To sum up, modern computer systems fundamentally work only with binary code; they simply do not understand anything else. Binary code underlies not only the representation of information but also every programming language known today, since programs are ultimately translated into machine code. So to understand how all of this works, you first need to grasp how sequences of ones and zeros are applied.