Compression is a special case of encoding, the main characteristic of which is that the resulting code is smaller than the original. The process is based on the search for repetitions in the information series and the subsequent storage of only one next to the number of repetitions. Thus, for example, if a sequence appears in a file as “AAAAAA”, occupying 6 bytes, it will be saved as “6A”, which occupies only 2 bytes, in the RLE compression algorithm.

Process history

Morse code invented in 1838 is the first known case of data compression. Later, when mainframe computers began to gain popularity in 1949, Claude Shannon and Robert Fano invented coding, named after the authors - Shannon-Fano. This encryption assigns codes to symbols in a probability theory data set.

Only in the 1970s, with the advent of the Internet and online storage, were full-fledged compression algorithms implemented. Huffman codes were dynamically generated based on input information. The key difference between Shannon-Fano coding and Huffman coding is that in the first, the probability tree was built from the bottom up, creating a suboptimal result, and in the second from top to bottom.

In 1977, Abraham Lempel and Jacob Ziv published their innovative LZ77 method, using a more modernized vocabulary. In 1978, the same team of authors published the LZ78 advanced compression algorithm. Which uses a new dictionary that analyzes the input data and generates a static dictionary, and not a dynamic one, like the 77 version.

Compression Forms

Compression is performed by a program that uses a formula or algorithm that determines how to reduce the size of the data. For example, imagine a string of bits with a smaller string of 0 and 1, using a dictionary for transformations or a formula.

Compression can be simple. Such as, for example, deleting all unnecessary characters, inserting one repeating code to indicate a repeat line, and replacing a smaller bit string. The file compression algorithm can reduce the text file to 50% or significantly more.

For transmission, the process is performed in the transmission unit, including header data. When information is sent or received via the Internet, archived separately or together with other large files, it can be transmitted in ZIP, GZIP or other “reduced” format.

Advantage of compression algorithms:

- Significantly reduces memory footprint. With a compression ratio of 2: 1, a file of 20 megabytes (MB) will occupy 10 MB of space. As a result, network administrators spend less money and time storing databases.

- Optimizes backup performance.

- An important method of data reduction.

- Almost any file can be compressed, but it is important to choose the right technology for a specific file type. Otherwise, the files may be "reduced", but the overall size will not change.

Two types of methods are applied - lossless and lossy compression algorithms. The first allows you to restore the file to its original state without losing one bit of information with an uncompressed file. The second is a typical approach to executable files, text and spreadsheets, where the loss of words or numbers will lead to a change in information.

Lossy compression permanently removes data bits that are redundant, unimportant, or invisible. It is useful with graphics, audio, video and images, where the removal of some bits has virtually no noticeable effect on the presentation of the content.

Huffman Compression Algorithm

This process can be used to “reduce” or encrypt.

It is based on the assignment of codes of different bit lengths to the corresponding each repeated character, the more such repeats, the higher the compression ratio. To restore the original file, you need to know the assigned code and its length in bits. Similarly, use a program to encrypt files.

The procedure for creating data compression algorithms:

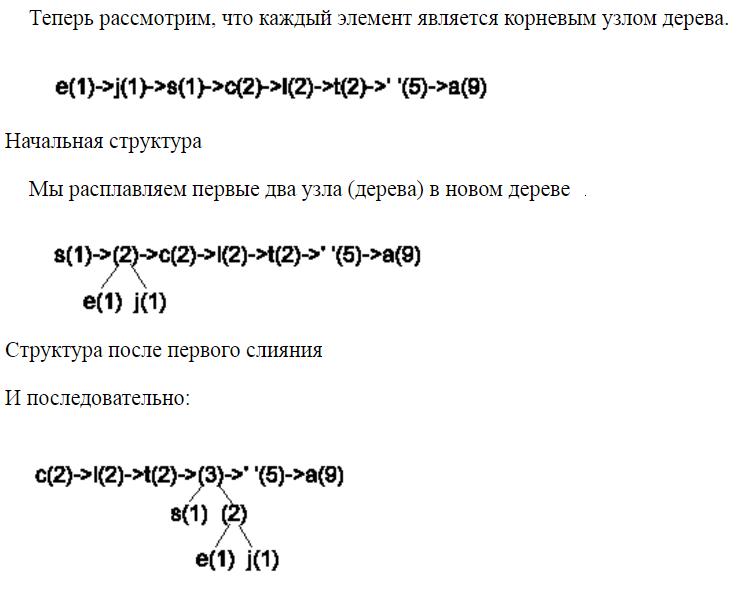

- They calculate how many times each character is repeated in the file to "reduce".

- Create a linked list with information about symbols and frequencies.

- Sort the list from smallest to largest depending on frequency.

- Convert each item in the list to a tree.

- Combine the trees into one, while the first two form a new one.

- Add the frequencies of each branch to a new tree element.

- Insert a new one into the desired place in the list, in accordance with the sum of the frequencies received.

- Assign a new binary code for each character. If the zero branch is taken, zero is added to the code, and if the first branch, one is added.

- The file is recoded according to the new codes.

- For example, the characteristics of compression algorithms for short text: "ata la jaca a la estaca"

- They calculate how many times each symbol appears and compose a linked list: '' (5), a (9), c (2), e (1), j (1), l (2), s (1), t ( 2)

- Order in frequency from lowest to highest: e (1), j (1), s (1), c (2), l (2), t (2), '' (5), a (9)

As you can see, the root node of the tree is created, then codes are assigned.

And it remains only to pack the bits in groups of eight, that is, in bytes:

01110010 | 11010101 | 11111011 | 00010010 | 11010101 | 11110111 | 10111001 | 10,000,000 |

0x72 | 0xd5 | 0xFB | 0x12 | 0xd5 | 0xF7 | 0xB9 | 0x80 |

Only eight bytes, and the source text was 23.

Demonstration of the LZ77 library

Let's consider the LZ77 algorithm using the example text “howtogeek”. If you repeat it three times, then it will change it as follows.

Then, when he wants to read the text back, he will replace each instance (h) with “howtogeek”, returning to the original phrase. This demonstrates a lossless data compression algorithm since the data that is entered matches the received data.

This is a perfect example when most of the text is compressed with just a few characters. For example, the word “this” will be condensed even if it appears in words such as “this,” “them,” and “this.” With repeating words, you can get huge compression ratios. For example, a text with the word "howtogeek" repeated 100 times has a size of three kilobytes, with compression it will take only 158 bytes, that is, with a 95% "decrease".

This, of course, is a rather extreme example, but it clearly demonstrates the properties of compression algorithms. In general practice, it is 30-40% with the ZIP text format. The LZ77 algorithm applies to all binary data, not just text, although the latter is easier to compress due to duplicate words.

Discrete cosine image conversion

Compressing video and audio works very differently. Unlike text, where lossless compression algorithms are performed, and data is not lost, images perform “reduction” with losses, and the more%, the more losses. This leads to those awful looking JPEG files that people have uploaded, exchanged, and taken screenshots several times.

Most pictures, photos and other things store a list of numbers, each of which represents one pixel. JPEG saves them using an image compression algorithm called discrete cosine transform. It is a combination of sinusoidal waves, folding together with different intensities.

This method uses 64 different equations, then Huffman encoding, to further reduce file size. Such an image compression algorithm gives an incredibly high compression ratio, and reduces it from a few megabytes to several kilobytes, depending on the required quality. Most photos are compressed to save download time, especially for mobile users with poor data transfer.

Video works a little differently than images. Typically, algorithms for compressing graphic information are used with what is called "inter-frame compression", which calculates the changes between each frame and saves them. So, for example, if there is a relatively still picture that takes several seconds in the video, it will be “reduced” to one. Inter-frame compression provides digital television and web video. Without it, the video would weigh hundreds of gigabytes, which is more than the average size of a hard drive in 2005.

Audio compression works very much like text and image compression. If JPEG removes details from an image that are not visible to the human eye, sound compression does the same for sounds. MP3 uses a bit rate ranging from a low of 48 and 96 kbps (lower limit) to 128 and 240 kbps (pretty good) to 320 kbps (high-fidelity sound), and you can only hear the difference with exceptionally good headphones. There are lossless compression codecs for sound, the main of which is FLAC and uses LZ77 encoding to transmit lossless sound.

Text Reduction Formats

The range of libraries for text mainly consists of a lossless data compression algorithm, except in extreme cases for floating point data. Most compressor codecs include LZ77, Huffman, and Arithmetic coding. They are used after other tools to squeeze a few percentage points of compression.

Runs of values are encoded as a character followed by a run length. You can correctly restore the original stream. If the length of the series is <= 2 characters, it makes sense to just leave them unchanged, for example, as at the end of the stream with "bb".

In some rare cases, they get additional savings by applying a transformation with lossy compression algorithms to parts of the content before applying the lossless method. Since files cannot be restored to their original state in these transformations, these “processes” are reserved for text documents. Which will not suffer from loss of information, for example, truncation of floating point numbers to only two significant decimal places.

Today, most text compression systems work by combining various data transformations to achieve maximum results. The meaning of each stage of the system is to perform the transform so that the next stage can continue effective compression. Summing up these steps gives a small file that can be restored without loss. There are literally hundreds of formats and compression systems, each of which has its pros and cons with respect to different types of data.

HTTP Schemas: DEFLATE and GZIP

Today, two widely used HTTP text compression algorithms are used on the Internet: DEFLATE and GZIP.

DEFLATE - Usually combining data using LZ77, Huffman coding. The gzip file uses DEFLATE internally, along with some interesting locks, filtering heuristics, header and checksum. In general, additional blocking and heuristics that use GZIP provide quality compression ratios than just one DEFLATE.

The next generation data protocols - SPDY and HTTP2.0, support header compression using GZIP, so most of the web stack will use it in the future.

Most developers simply download uncompressed content and rely on a web server to “reduce" data on the fly. This gives excellent results and an easy-to-use image compression algorithm. By default, many servers have GZIP installed with level 6, the highest level of which is 9. This is done intentionally, which allows servers to compress data faster due to a larger output file.

BZIP2 and LZMA formats, which create more compact forms than GZIP, which are unpacked faster.

LZMA can be considered a distant relative of GZIP. Both begin with the popular LZ vocabulary, followed by a statistical coding system. However, the improved LZMA ability to compress small files lies in its “advanced matching algorithms and LZ windows.

Document pre-processing

For high-quality compression, a two-stage process is performed:

- minimization stage;

- lossless stage.

Minification is a reduction in the size of data so that it can be used without processing, erasing a lot of unnecessary data in a file without changing it syntactically. So, it is safe to remove most of the spaces from Jscript, reducing the size without changing the syntax. Minification is performed during the assembly process. Either as a manual step, or as part of an automated assembly chain.

There are many programs that perform basic compression algorithms, among them:

- Winzip is a lossless storage format widely used for documents, images or applications. This is a fairly simple program that compresses each of the files individually, which allows you to restore each without having to read the rest. Thus increasing the productivity of the process.

- 7zip - A free manager for a very powerful and simplest information compression algorithm. Thanks to the 7z file format, which improves the ZIP standard by 50%. It supports the other most common formats, such as RAR, ZIP, CAB, GZIP and ARJ, so it does not create problems for use with any compressed file and integrates with the context menu in Windows.

- GZIP is one of the most famous compressors designed for Linux. Given the success on this platform, it has been finalized for Windows. One of the main advantages of gzip is that it uses the DEFLATE algorithm (a combination of LZ77 and Huffman coding).

- WinRAR - Compressor program and multifunctional data decompressor. This is an indispensable tool to save disk space and transfer time when sending and receiving files over the Internet or when backing up. It is used to compress all types of documents or programs so that they take up less disk space and can be stored or transferred over the network faster. This is the first compressor that implements 64-bit file management, which allows you to handle large volumes that are limited only by the operating system.

CSS minifiers

There are many CSS minifiers. Some of the options available include:

- Online CSS Minifier

- freeformatter.com/css-minifier;

- cleancss.com/css-minify;

- cnvyr.io;

- minifier.org;

- css-minifier.com.

The main difference between these tools depends on how deep their minification processes are. So, simplified optimization filters the text to remove unnecessary spaces and arrays. The developed optimization provides for the replacement of "AntiqueWhite" with "# FAEBD7", since the hexadecimal form in the file is shorter, and the forced use of the lower GZIP register character.

More aggressive CSS techniques save space, but may violate CSS requirements. Thus, most of them do not have the ability to be automated, and developers make a choice between size or degree of risk.

In fact, there is a new tendency to create other versions of CSS languages to more effectively help the code author as an added benefit, allowing the compiler to produce less CSS code.

Pros and Cons of Compression

The main advantages of compression are reduced hardware storage, data transfer time and communication bandwidth.

Such a file requires less memory, and the use of the method reduces the cost of disk or solid state drives. It requires less time to transmit at low network bandwidth.

The main disadvantage of compression is the effect on performance resulting from the use of CPU and memory resources for the process and subsequent unpacking.

Many manufacturers have developed systems to try to minimize the impact of resource-intensive computing related to compression. If it is executed before data is written to disk, the system can offload the process to save system resources. For example, IBM uses a separate hardware acceleration card to handle compression in some enterprise storage systems.

If data is compressed after it is written to disk or after processing, it is executed in the background to reduce the impact on machine performance. Although post-processing compression reduces the response time for each input and output (I / O), it still consumes memory and processor cycles and affect the number of operations that the storage system processes. In addition, since the data must initially be written to disk or flash drives in an uncompressed form, the saving of physical memory is not as great as with the built-in “reduction”.

The future is never defined, but based on current trends, some predictions can be made as to what might happen to data compression technology. Context mixing algorithms, such as PAQ and its variants, have begun to gain popularity, and they tend to achieve the highest "reduction" rates. Although usually they are slow.

With an exponential increase in hardware speed under Moore’s law, context mixing processes are likely to flourish. Since the cost of speed is overcome by fast equipment due to the high degree of compression. The algorithm that PAQ sought to improve is called Prediction of Partial Matching Paths. Or PPM.

Finally, the Lempel-Ziv-Markov chain algorithm (LZMA) invariably demonstrates an excellent compromise between speed and high compression ratio and is likely to create more new options in the future. It will be the leader because it has already been adopted in many competing compression formats, for example, in the 7-Zip program.

Another potential development is the use of compression using substring enumeration (CSE), which is a promising technology and does not yet have many software implementations.